Read Parquet PySpark

PySpark provides a simple way to read Parquet files: the `read.parquet()` method, which reads the data at a given path into a DataFrame. The `pyspark.sql` package is imported to read and write DataFrames as Parquet files. As with writing, `DataFrameReader` provides a `parquet()` function (`spark.read.parquet`), which can also read Parquet files from Amazon S3. One tutorial on the topic (Apache Spark, January 24, 2023) works through an example of reading and writing a Parquet file in Spark. A typical example starts with `import tempfile` and `with tempfile.TemporaryDirectory() as d:` so that the files land in a temporary directory. A common related question is how to read the Parquet files under a directory using PySpark.

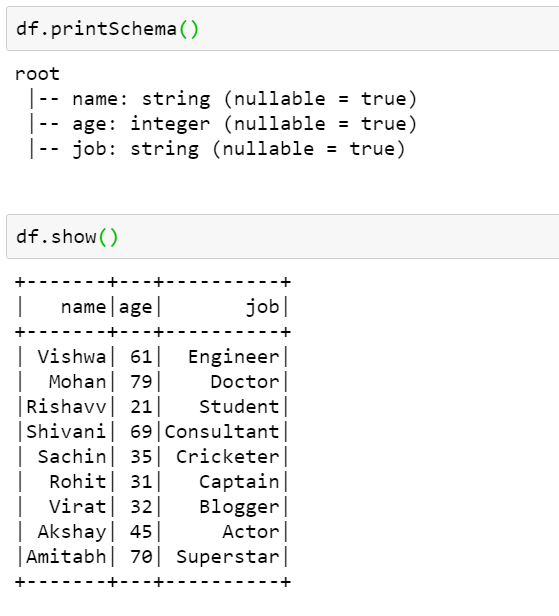

Two forum questions recur on this topic. One begins "I am writing a Parquet file from a Spark DataFrame the following way"; the other, "I want to read a Parquet file with PySpark". Both come down to `read.parquet`, the method PySpark provides to read Parquet files from a given path into a DataFrame. Some of the quoted answers begin with `from pyspark.sql import SQLContext`.

Parquet is a columnar storage format published by Apache. A common first exercise is to write a DataFrame into a Parquet file and read it back with `read.parquet()`, using a temporary directory created with `tempfile.TemporaryDirectory()`. The same `parquet()` function on `DataFrameReader` (`spark.read.parquet`) reads Parquet files from Amazon S3 and reads the Parquet files under a directory.

How to read and write Parquet files in PySpark

`DataFrameReader` is the foundation for reading data in Spark; it is accessed through the attribute `spark.read`. Its `read.parquet` method reads the data from a Parquet file. Parquet is a columnar format that is supported by many other data processing systems.

[Solved] PySpark how to read in partitioning columns 9to5Answer

Parquet is a columnar format that is supported by many other data processing systems, and PySpark can both write and read it. A typical example writes to a temporary directory created with `tempfile.TemporaryDirectory()`. A related task is writing a PySpark DataFrame into a specific number of Parquet files in total across all partition columns.

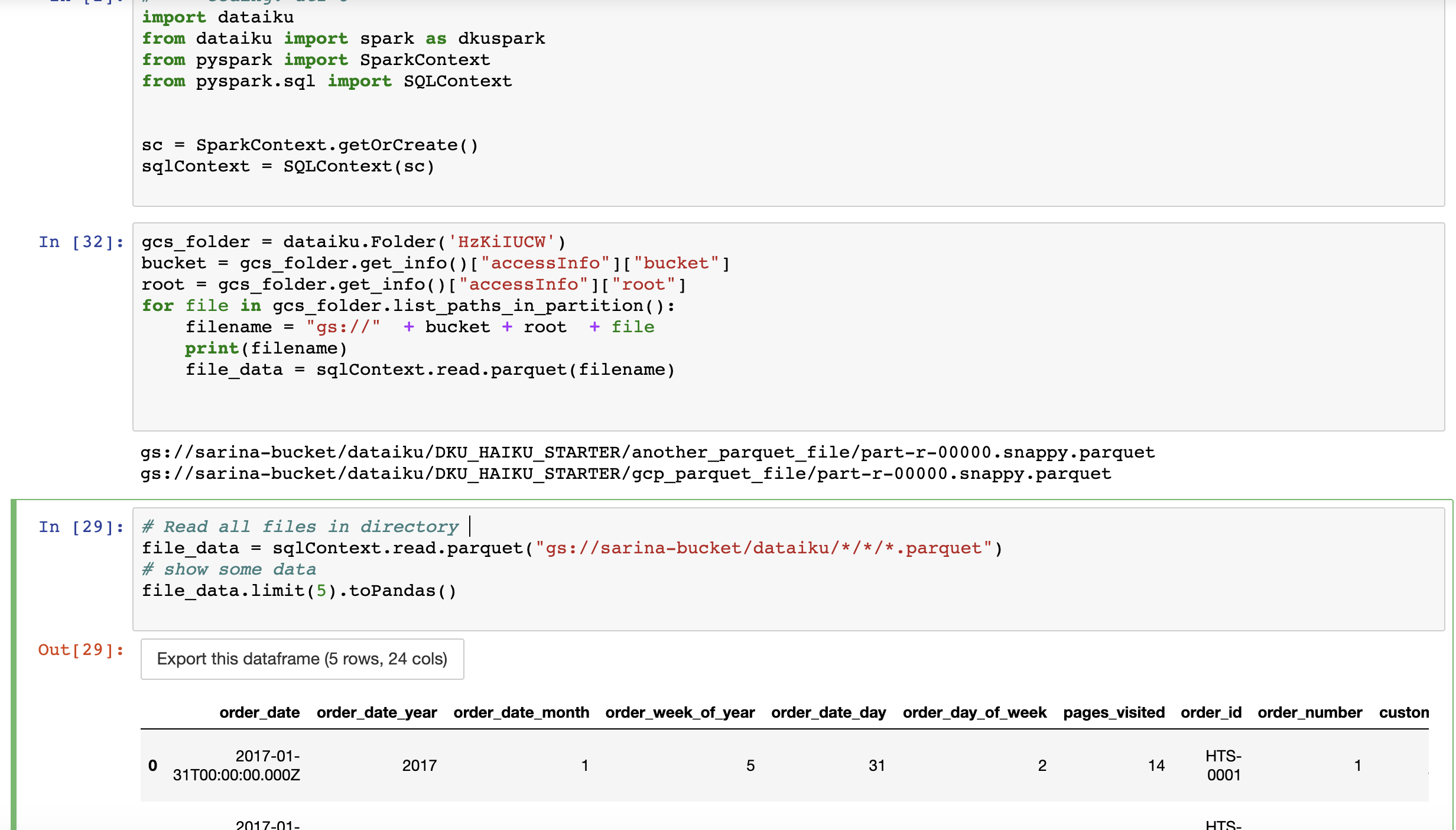

Solved How to read parquet file from GCS using pyspark? Dataiku

`read.parquet` is the PySpark method for reading Parquet data. As with writing, `DataFrameReader` provides the `parquet()` function (`spark.read.parquet`), and the same call reads Parquet files from Amazon S3. Parquet is a columnar format that is supported by many other data processing systems.

How to read Parquet files in PySpark Azure Databricks?

To try this out, write a DataFrame into a Parquet file and read it back. As with writing, `DataFrameReader` provides the `parquet()` function (`spark.read.parquet`), which also covers reading the Parquet files under a directory and reading from Amazon S3.

How To Read Various File Formats In PySpark (JSON, Parquet, ORC, Avro)

`read.parquet` is the PySpark method for reading Parquet data; a quick check is to write a DataFrame into a Parquet file and read it back. Some examples begin with `from pyspark.sql import SQLContext`.

How to read a Parquet file using PySpark

PySpark provides a simple way to read Parquet files using the `read.parquet()` method; as with writing, `DataFrameReader` exposes it as `spark.read.parquet`. A typical example starts with `import tempfile` and `with tempfile.TemporaryDirectory() as d:`, then writes to and reads from that temporary directory. A related task is writing a PySpark DataFrame into a specific number of Parquet files in total across all partition columns.

How To Read A Parquet File Using Pyspark Vrogue

The `pyspark.sql` package is imported to read and write DataFrames as Parquet files. Parquet is a columnar format, and a frequent question is how to read the Parquet files under a directory using PySpark.

PySpark Read and Write Parquet File Spark by {Examples}

Parquet files can be written and read from Python with Spark: `read.parquet` is the PySpark method for reading the data, and a typical example writes to a temporary directory created with `tempfile.TemporaryDirectory()`. Parquet is a columnar format.

PySpark read parquet Learn the use of READ PARQUET in PySpark

Parquet is a columnar storage format published by Apache and supported by many other data processing systems. A quick way to get started is to write a DataFrame into a Parquet file and read it back. A related task is writing a PySpark DataFrame into a specific number of Parquet files in total across all partition columns.

Write And Read Parquet Files In Python / Spark

Parquet is a columnar storage format published by Apache. The `pyspark.sql` package is imported to read and write DataFrames as Parquet files.

Write PySpark DataFrame Into Specific Number Of Parquet Files In Total Across All Partition Columns

As with writing, `DataFrameReader` provides the `parquet()` function (`spark.read.parquet`), which reads files of this type from a given path, including paths on Amazon S3. A typical example writes to a temporary directory created with `tempfile.TemporaryDirectory()`.

How To Read Parquet Files Under A Directory Using PySpark?

Parquet is a columnar format that is supported by many other data processing systems. `read.parquet` is the PySpark method for reading Parquet data.

PySpark Provides A Simple Way To Read Parquet Files Using The read.parquet() Method

`DataFrameReader` is the foundation for reading data in Spark; it is accessed through the attribute `spark.read`. A quick check is to write a DataFrame into a Parquet file and read it back. Some examples begin with `from pyspark.sql import SQLContext`.